This morning, Vellum.ai said it had closed a $5 million seed round. The company declined to share who its lead investor was for the round apart from noting that it was a multi-stage firm, but it did tell TechCrunch that Rebel Fund, Eastlink Capital, Pioneer Fund, Y Combinator and several angels took part in the round.

The startup first caught TechCrunch’s eye during Y Combinator’s most recent demo day (Winter 2023) thanks to its focus on helping companies improve their generative AI prompting. Given the number of generative AI models, how quickly they are progressing, and how many business categories appear ready to leverage large language models (LLMs), we liked its focus.

Metrics that Vellum shared with TechCrunch suggest the market also likes what the startup is building. According to Akash Sharma, Vellum’s CEO and co-founder, the startup has 40 paying customers today, with revenue increasing by around 25% to 30% per month.

For a company born in January of this year, that’s impressive.

Normally in a short funding update of this sort, I’d spend a little time detailing the company and its product, focus on growth, and scoot along. However, as we’re discussing something a little bit nascent, let’s take our time to talk about prompt engineering more generally.

Building Vellum

Sharma told me he and his co-founders (Noa Flaherty and Sidd Seethepalli) were employees at Dover, another Y Combinator company from the 2019 era, working with GPT-3 in early 2020 when its beta was released.

While at Dover, they built generative AI applications to write recruiting emails, job descriptions and the like, but they noticed that they were spending too much time on their prompts and could neither version the prompts in production nor measure their quality. They also needed to build tooling for fine-tuning and semantic search. The sheer amount of work by hand was adding up, Sharma said.

That meant the team was spending engineering time on internal tooling instead of building for the end-user. Thanks to that experience and the machine learning operations background of his two co-founders, when ChatGPT was released last year, they realized the market demand for tooling to make generative AI prompting better was “going to grow exponentially.” Hence, Vellum.

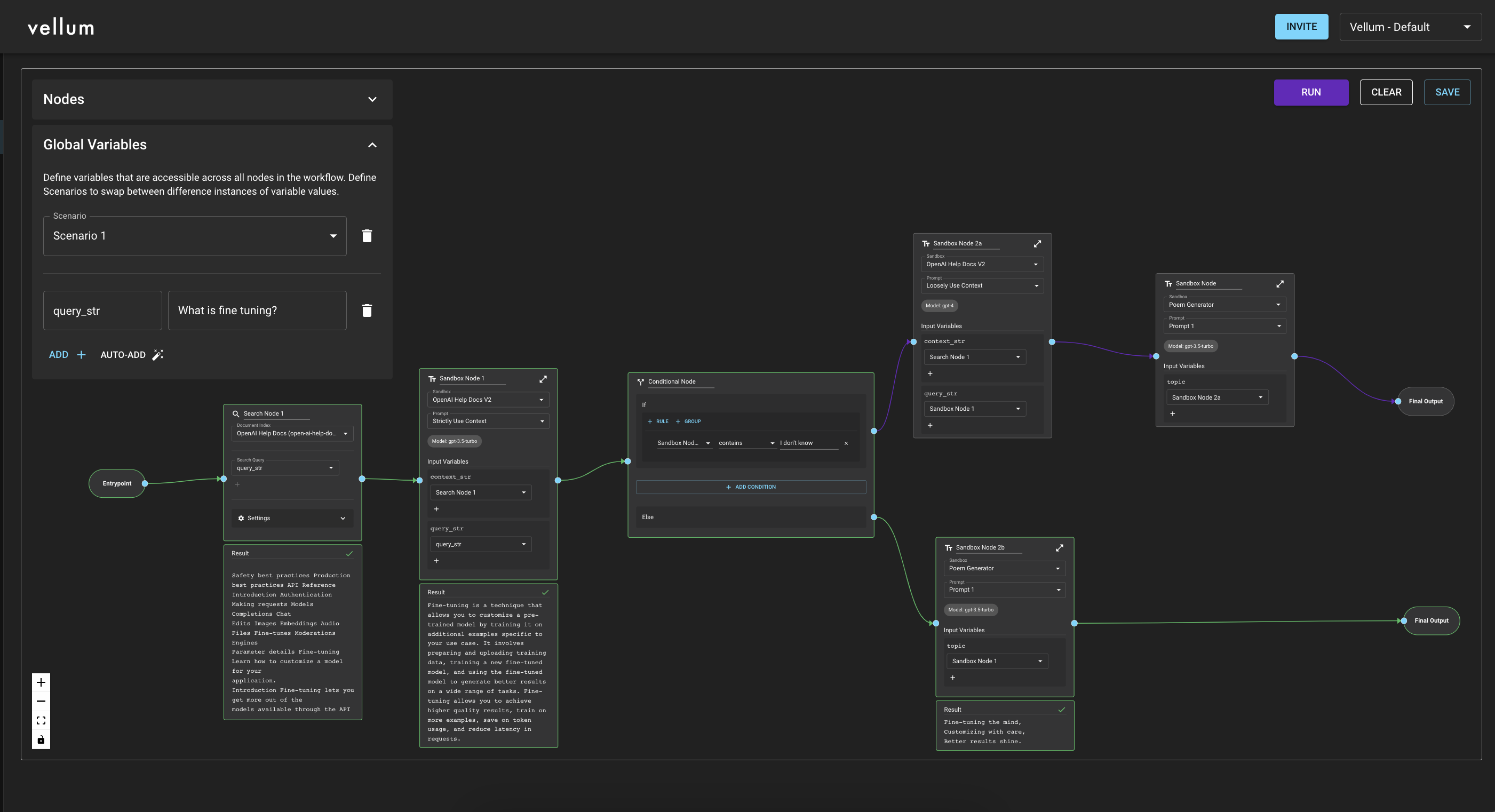

LLM workflows inside of Vellum. Image Credits: Vellum

Seeing a market open up new opportunities to build tooling is not novel, but modern LLMs may not only change the AI market itself, they could also make it larger. Sharma told me that until the recent wave of LLMs arrived, “it was never possible to use natural language [prompts] to get results from an AI model.” The shift to accepting natural language inputs “makes the [AI] market a lot bigger because you can have a product manager or a software engineer […] literally anyone be a prompt engineer.”

More power in more hands means greater demand for tooling. On that topic, Vellum offers a way for AI prompters to compare model output side-by-side, the ability to search for company-specific data to add context to particular prompts, and other tools like testing and version control that companies may like in order to ensure that their prompts are spitting out correct stuff.
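To give a feel for what side-by-side comparison and prompt testing can look like, here is a minimal Python sketch. Everything in it is our own illustration rather than Vellum's product or API: the prompt versions, the two stub "models" (plain functions standing in for real LLM calls) and the eight-word check are all hypothetical.

```python
from typing import Callable, Dict

Model = Callable[[str], str]

# Two versions of one hypothetical prompt, kept together so a change
# can be compared against the previous wording or rolled back.
PROMPT_VERSIONS: Dict[str, str] = {
    "v1": "Write a subject line for a recruiting email about: {role}",
    "v2": "Write a subject line under 8 words for a recruiting email about: {role}",
}


def terse_model(prompt: str) -> str:
    """Stub model (assumption): returns a short subject line."""
    role = prompt.rsplit(": ", 1)[-1]
    return f"Join us: {role}"


def wordy_model(prompt: str) -> str:
    """Stub model (assumption): returns a long subject line."""
    role = prompt.rsplit(": ", 1)[-1]
    return f"An exciting opportunity awaits you as our next {role} today"


MODELS: Dict[str, Model] = {"terse": terse_model, "wordy": wordy_model}


def compare(version: str, role: str) -> Dict[str, str]:
    """Run one prompt version through every model and collect the outputs."""
    prompt = PROMPT_VERSIONS[version].format(role=role)
    return {name: model(prompt) for name, model in MODELS.items()}


def passes(output: str, max_words: int = 8) -> bool:
    """A simple quality check: the output must stay within a word budget."""
    return len(output.split()) <= max_words


if __name__ == "__main__":
    for name, output in compare("v2", "Data Engineer").items():
        print(f"{name:>6} | pass={passes(output)} | {output}")
```

A real setup would swap the stubs for actual model calls and use richer checks, but the shape is the same: fixed inputs, versioned prompts, and an automated pass/fail signal.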

But how hard can it be to prompt an LLM? Sharma said, “It is simple to spin up an LLM-powered prototype and launch it, but when companies end up taking something like [that] to production, they realize that there are many edge cases that come up, which tend to provide weird results.” In short, if companies want their LLMs to be good consistently, they will need to do more work than simply skin GPT outputs sourced from user queries.

Still, that’s a bit general. How do companies actually use refined prompts in applications where the outputs need to be well-tuned?

To explain, Sharma pointed to a support ticketing software company that targets hotels. This company wanted to build an LLM agent of sorts that could answer questions like, “Can you make a reservation for me?”

It first needed a prompt that worked as an escalation classifier to decide if the question should be answered by a person or the LLM. If the LLM was going to answer the query, the model should then — we’re extending the example here on our own — be able to correctly do so without hallucinating or going off the rails.

So, LLMs can be chained together to create a sort of logic that flows through them. Prompt engineering, then, is not simply noodling with LLMs to try and get them to do something whimsical. In our view, it’s something more akin to natural language programming. It’s going to need its own tooling framework, similar to other forms of programming.
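To make the chaining idea concrete, here is a minimal Python sketch of the hotel example: one prompt decides whether to escalate, and a second prompt answers only if the first one says it is safe to. The `fake_llm` function is a stub we made up so the example runs without any API, and the prompt wording, keywords and function names are hypothetical, not the customer's or Vellum's actual implementation.

```python
from typing import Callable

# Hypothetical prompt templates; the wording is illustrative only.
CLASSIFIER_PROMPT = (
    "Decide whether a hotel guest's request needs a human agent.\n"
    "Reply with exactly one word: ESCALATE or ANSWER.\n"
    "Message: {message}"
)
ANSWER_PROMPT = (
    "You are a hotel support assistant. Answer the guest briefly.\n"
    "Message: {message}"
)


def fake_llm(prompt: str) -> str:
    """Stand-in for a real model call (an assumption so the sketch runs offline)."""
    message = prompt.rsplit("Message: ", 1)[-1].lower()
    if prompt.startswith("Decide whether"):
        needs_human = "refund" in message or "complaint" in message
        return "ESCALATE" if needs_human else "ANSWER"
    return f"Happy to help with: {message}"


def handle_ticket(message: str, llm: Callable[[str], str] = fake_llm) -> dict:
    """Chain two prompts: classify first, then answer only if no escalation is needed."""
    decision = llm(CLASSIFIER_PROMPT.format(message=message)).strip().upper()
    # Anything other than a clean "ANSWER" goes to a person, so unexpected
    # classifier output fails safe instead of reaching the guest.
    if decision != "ANSWER":
        return {"route": "human", "reply": None}
    return {"route": "llm", "reply": llm(ANSWER_PROMPT.format(message=message))}


if __name__ == "__main__":
    print(handle_ticket("Can you make a reservation for me?"))
    print(handle_ticket("I want a refund for last night."))
```

The branch on the classifier's output is ordinary control flow, which is why this starts to look like programming: each prompt is a function whose output decides what runs next.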

How big is the market?

TechCrunch+ has explored why companies expect the enterprise generative AI market to grow to immense proportions. There should be lots of miners (customers) who will need picks and shovels (prompt engineering tools) to make the most of generative AI.

Vellum declined to share its pricing scheme, but did note that its services cost in the three to four figures per month. Crossed with more than three dozen customers, that gives Vellum a pretty healthy run-rate for a seed-stage company. A quick uptick in demand tends to correlate with market size, so it’s fair to say there really is strong enterprise demand for LLMs.
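For a rough sense of the range, here is the back-of-the-envelope math as a short Python sketch. It rests on our own simplifying assumptions, not figures from Vellum: "three to four figures" is read as $100 to $9,999 per month, every one of the 40 paying customers is assumed to pay the same price, and the monthly total is simply multiplied by 12.

```python
def annual_run_rate(customers: int, monthly_price: float) -> float:
    """Annualized revenue if every customer paid the same monthly price."""
    return customers * monthly_price * 12


CUSTOMERS = 40            # paying customers, per Sharma
LOW_MONTHLY = 100         # smallest three-figure price (assumption)
HIGH_MONTHLY = 9_999      # largest four-figure price (assumption)

low = annual_run_rate(CUSTOMERS, LOW_MONTHLY)
high = annual_run_rate(CUSTOMERS, HIGH_MONTHLY)

if __name__ == "__main__":
    print(f"Implied run-rate range: ${low:,.0f} to ${high:,.0f} per year")
```

The bounds are very wide (about $48,000 to $4.8 million a year), so the real number depends entirely on where customers sit in that price band.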

That’s good news for the huge number of companies building, deploying or supporting LLMs. Given how many startups are in that mix, we’re looking at bright, sunny days ahead.

Prompt engineering startup Vellum.ai raises $5M as demand for generative AI services scales by Alex Wilhelm originally published on TechCrunch