Ten people were murdered this weekend in a racist attack on a Buffalo, New York supermarket. The eighteen-year-old white supremacist shooter livestreamed his attack on Twitch, the Amazon-owned video game streaming platform. Even though Twitch removed the video two minutes after the violence began, it was still too late. Gruesome footage of the terrorist attack is now openly circulating on platforms like Facebook and Twitter, even though the companies have vowed to take down the video.

On Facebook, some users who flagged the video were notified that the content did not violate its rules. The company told TechCrunch that this was a mistake, adding that it has teams working around the clock to take down videos of the shooting, as well as links to the video hosted on other sites. Facebook said that it is also removing copies of the shooter’s racist screed and content that praises him.

But when we searched a term as simple as "footage of buffalo shooting" on Facebook, one of the first results featured a 54-second screen recording of the terrorist's footage. TechCrunch encountered the video an hour after it had been uploaded and reported it immediately. The video wasn't taken down until three hours after it was posted, by which point it had already been viewed more than a thousand times.

In theory, this shouldn't happen. A representative for Facebook told TechCrunch that the company added multiple versions of the video, as well as the shooter's racist writings, to a database of violating content, which helps the platform identify, remove and block such content. We asked Facebook about this particular incident, but the company did not provide additional details.

"We're going to continue to learn, to refine our processes, to ensure that we can detect and take down violating content more quickly in the future," Facebook integrity VP Guy Rosen said during an unrelated call on Tuesday, in response to a question about why the company struggled to remove copies of the video.

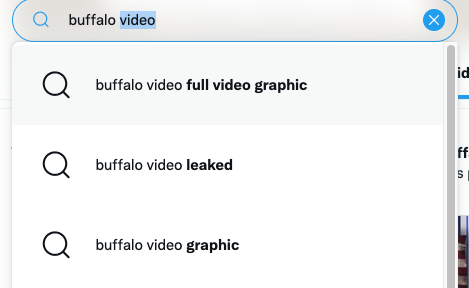

Reposts of the shooter’s stream were also easy to find on Twitter. In fact, when we typed “buffalo video” into the search bar, Twitter suggested searches like “buffalo video full video graphic,” “buffalo video leaked” and “buffalo video graphic.”

Image Credits: Twitter, screenshot by TechCrunch

We encountered multiple videos of the attack that had been circulating on Twitter for more than two days. One such video had over 261,000 views when we reviewed it on Tuesday afternoon.

In April, Twitter enacted a policy that bans individual perpetrators of violent attacks from the platform. Under this policy, Twitter also reserves the right to take down multimedia related to attacks, as well as language from terrorist "manifestos."

“We are removing videos and media related to the incident. In addition, we may remove Tweets disseminating the manifesto or other content produced by perpetrators,” a spokesperson from Twitter told TechCrunch. The company called this “hateful and discriminatory” content “harmful for society.”

Twitter also claims that some users are attempting to circumvent takedowns by uploading altered or manipulated content related to the attack.

In contrast, video footage of the weekend's tragedy was relatively difficult to find on YouTube. Basic search terms for the Buffalo shooting video mostly brought up coverage from mainstream news outlets. Using the same search terms we used on Twitter and Facebook, we found a handful of YouTube videos whose thumbnails showed the shooting but which turned out to contain unrelated content once clicked through. On TikTok, TechCrunch identified some posts that directed users to websites where they could watch the video, but our searches didn't turn up the actual footage on the app.

Twitch, Twitter and Facebook have stated that they are working with the Global Internet Forum to Counter Terrorism to limit the spread of the video. Twitch and Discord have also confirmed that they are working with government authorities that are investigating the situation. The shooter described his plans for the shooting in detail in a private Discord server prior to the attack.

According to documents reviewed by TechCrunch, the Buffalo shooter decided to broadcast his attack on Twitch because the 2019 antisemitic shooting at the Halle synagogue remained live on Twitch for over 30 minutes before it was taken down. The shooter considered streaming to Facebook but opted not to use the platform because he thought users needed to be logged in to watch livestreams.

Facebook has also inadvertently hosted livestreams of mass shootings that evaded algorithmic detection. Earlier the same year as the Halle synagogue shooting, 50 people were killed in an Islamophobic attack on two mosques in Christchurch, New Zealand, which the shooter streamed on Facebook for 17 minutes. At least three perpetrators of mass shootings, including the suspect in Buffalo, have cited the livestreamed Christchurch massacre as a source of inspiration for their racist attacks.

Facebook noted the day after the Christchurch shootings that it had removed 1.5 million videos of the attack, 1.2 million of which were blocked upon upload. That raised the question of why Facebook was unable to immediately detect the remaining 300,000 videos, a 20% failure rate.

Judging by how easy it was to locate videos of the Buffalo shooting on Facebook, it seems the platform still has a long way to go.