Inevitably, large businesses will collect all kinds of sensitive data. Often, that’s personally identifiable information (PII) about their customers and employees, or other information that only a select number of users should get access to. But as the amount of data that large businesses collect increases, manual data discovery and classification no longer scales. With Automatic DLP [Data Loss Prevention], Google recently launched a tool that helps its BigQuery users discover and classify sensitive data in their data warehouse and set access policies based on those discoveries. Automatic DLP was previously in public preview and is now generally available.

“One of the challenges that we see a lot of our customers facing is really around understanding their data so they can better protect it, preserve the privacy of PII for their customers, meet compliance, or just better govern their data,” Scott Ellis, Google Cloud’s product manager for this service, told me. “We really feel that one of the challenges that they face is really just that initial awareness or visibility into their data.”

Ellis noted that the manual processes that many companies had put in place aren’t able to cope with the scale of data that is now coming in. So it takes an automated system to go in and inspect every column for PII, for example, to ensure that this data isn’t unintentionally exposed.

There is an additional wrinkle here: a lot of companies also collect large amounts of unstructured data. “One of the biggest challenges we’ve heard from customers is around: when they have a column of email addresses, it’s good to know. Once you know it, you can treat it as that. But when you have unstructured data, it’s a little bit of a different challenge. You might have a note field. It’s super valuable. But every once in a while, somebody puts something sensitive in there. Treating those as a little bit different. Sometimes, the remediation is different for those,” Ellis explained.
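To make the idea of column-level inspection concrete, here is a minimal Python sketch. It is not how Automatic DLP works internally. The detector names, the regular expressions, and the `profile_columns` helper are all hypothetical and far simpler than anything a production service would use. It reports, per column, what share of values matched each detector, which shows why a dedicated email column and a free-text note field look different to a profiler.

```python
import re

# Hypothetical, deliberately simple detectors; a real DLP service uses far richer ones.
DETECTORS = {
    "EMAIL_ADDRESS": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "US_SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}


def profile_columns(rows):
    """Return {column: {detector: fraction of non-null values that matched}}."""
    hits = {}    # column -> detector -> number of matching values
    totals = {}  # column -> number of non-null values seen
    for row in rows:
        for column, value in row.items():
            if value is None:
                continue
            totals[column] = totals.get(column, 0) + 1
            text = str(value)
            for name, pattern in DETECTORS.items():
                if pattern.search(text):
                    per_column = hits.setdefault(column, {})
                    per_column[name] = per_column.get(name, 0) + 1
    return {
        column: {name: count / totals[column] for name, count in found.items()}
        for column, found in hits.items()
    }


# Made-up sample data: a structured contact column and an unstructured note field.
sample_rows = [
    {"id": 1, "contact": "ana@example.com", "note": "called twice"},
    {"id": 2, "contact": "bo@example.org", "note": "SSN 123-45-6789 on file"},
]
report = profile_columns(sample_rows)
```

In this sketch, every value in the `contact` column matches the email detector, while only some values in the `note` column contain something sensitive. That is the kind of difference that might call for a different remediation.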

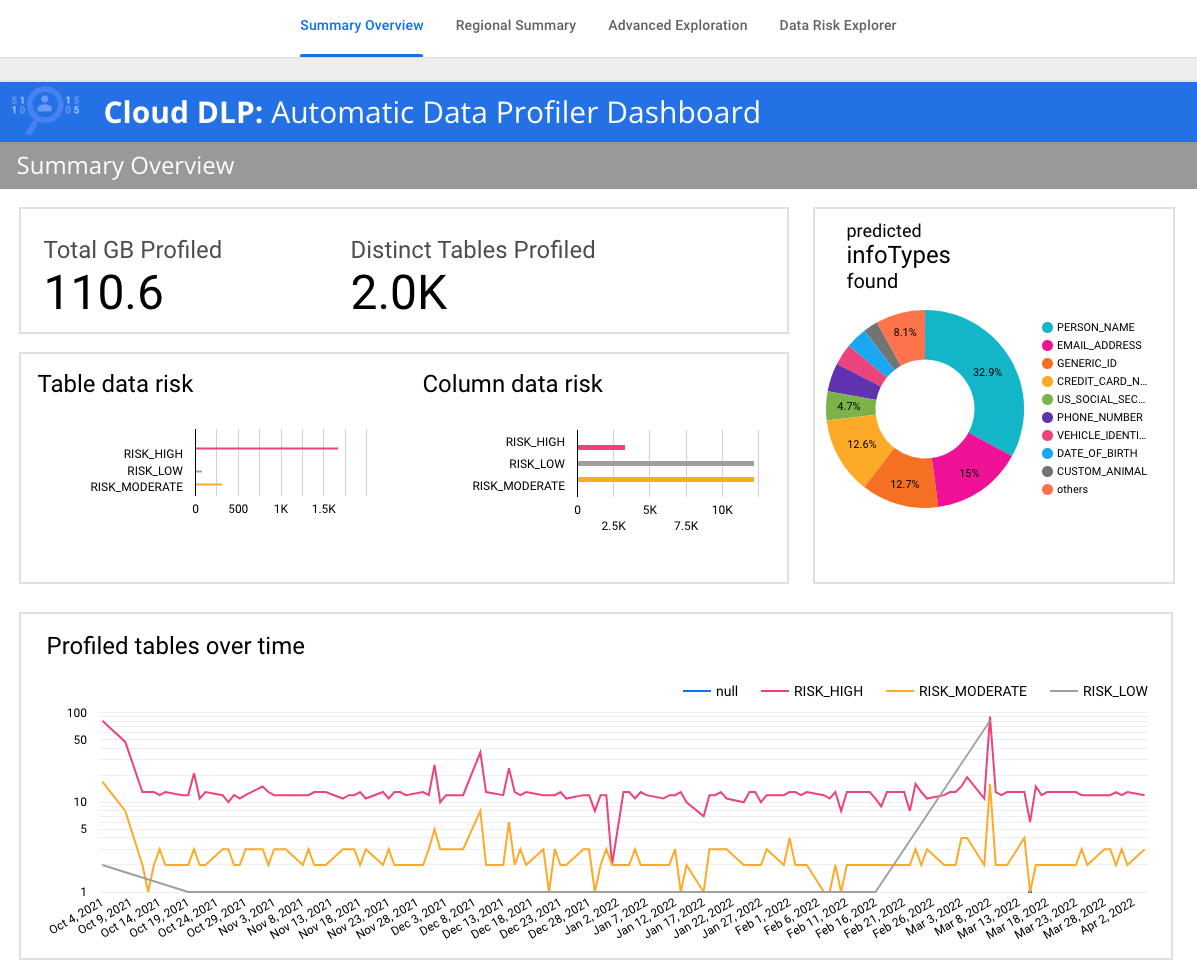

To make it a bit easier to get started with Automatic DLP, the team built a number of new dashboard templates for Google’s Data Studio to give users easier access to an advanced summary and a more graphical investigation tool. Users can also drill into their data in the Google Cloud Console, but that’s not the most user-friendly experience. They can, of course, also take this data to Looker or another BI tool to investigate it, but the team wanted to give users an easy starting point for working with their data, one that captures a lot of what the team itself has learned.

With this release, Google is also giving users new tools to set the frequency and conditions for when their data is profiled. When the service launched, the Google team set the defaults, but in talking to customers, it quickly became clear that there were often use cases where the profiler had to run at different intervals. If somebody changes a table’s schema, for example, one company may want that to be profiled right away and another may want to wait a few days for that table to populate with new data.
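The schema-change example can be sketched as a small decision function. This is an illustration only, not the service's configuration API. The `should_reprofile` function and its parameters are hypothetical names chosen for this sketch.

```python
from datetime import datetime, timedelta


def should_reprofile(last_profiled, schema_changed_at, now, delay_after_change):
    """Decide whether a table is due for re-profiling after a schema change.

    Hypothetical policy: re-profile once `delay_after_change` has elapsed
    since a schema change that happened after the last profile.
    """
    if schema_changed_at is None or schema_changed_at <= last_profiled:
        return False  # no schema change since the last profile
    return now - schema_changed_at >= delay_after_change


last_profiled = datetime(2022, 4, 1)
schema_changed_at = datetime(2022, 4, 10)
now = datetime(2022, 4, 11)

# One company profiles right away; another waits for the table to fill up.
immediate = should_reprofile(last_profiled, schema_changed_at, now, timedelta(0))
delayed = should_reprofile(last_profiled, schema_changed_at, now, timedelta(days=3))
```

With these made-up dates, the first policy triggers a new profile as soon as the schema changes, while the second holds off until three days have passed.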

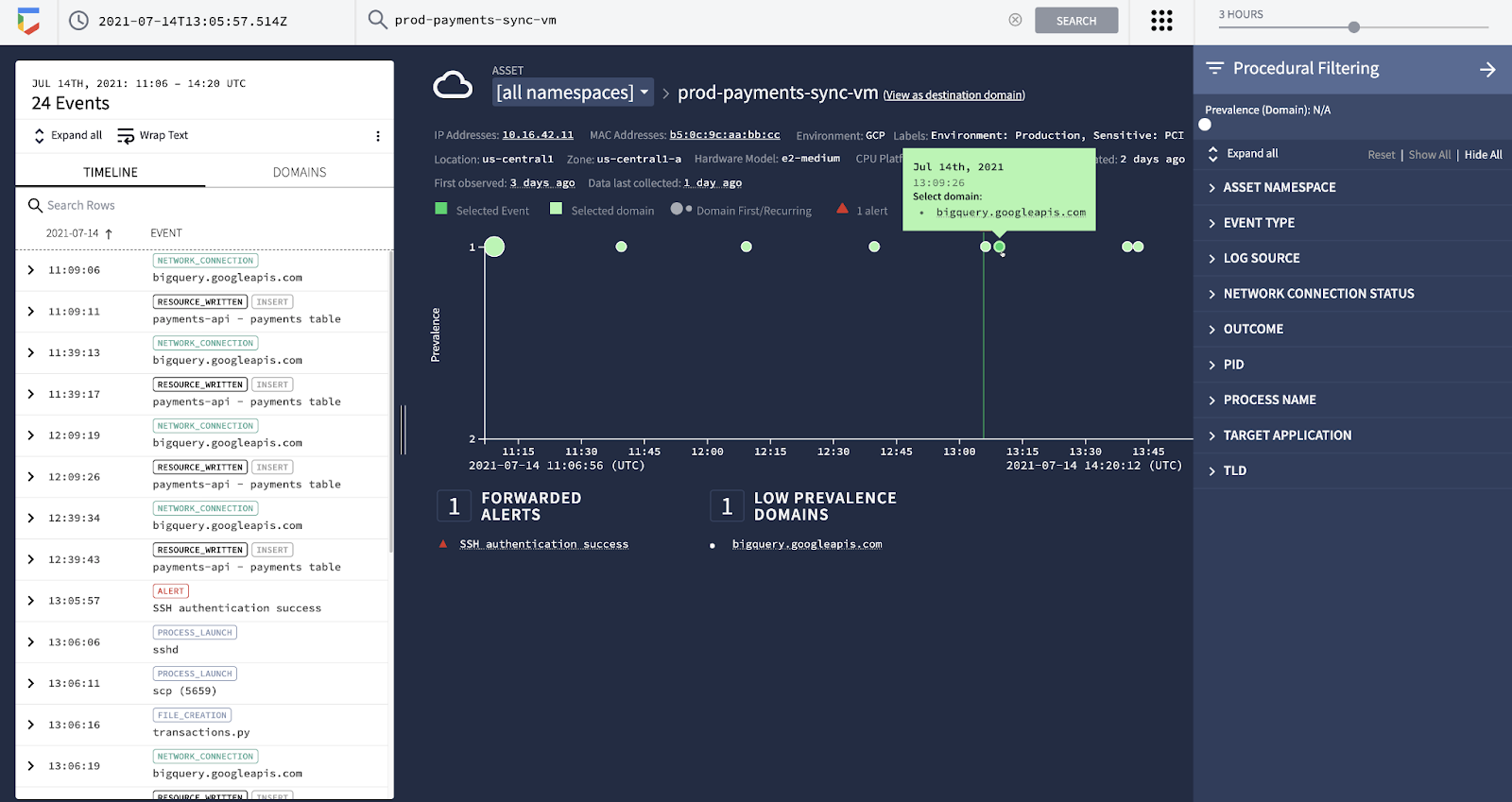

Another new feature the team built is an integration with Chronicle, Google Cloud’s security analytics service. Automatic DLP can now automatically sync risk scores for every table with Chronicle, and the team promises to build additional integrations over time.