Soul Machines, a New Zealand-based company that uses CGI, AI and natural language processing to create lifelike digital people who can interact with humans in real time, has raised $70 million in a Series B1 round, bringing its total funding to $135 million. The startup will put the funds toward enhancing its Digital Brain technology, which uses a technique called “cognitive modeling” to recreate things like the human brain’s emotional response system in order to construct autonomous animated characters.

The funding was led by new investor SoftBank Vision Fund 2, with additional participation from Cleveland Avenue, Liberty City Ventures and Solasta Ventures. Existing investors Temasek, Salesforce Ventures and Horizons Ventures also participated in the round.

While Soul Machines does envision its tech being used for entertainment, it’s mainly pursuing a B2B play: creating emotionally engaging brand and customer experiences. The basic problem the startup is trying to solve is how to create personal brand experiences in an increasingly digital world, especially when most companies interact with their customers mainly through apps and websites.

The answer to that, Soul Machines thinks, is a digital workforce, one that is available at any time of the day, in any language, and mimics the human experience so well that humans have an emotional reaction, which ultimately leads to brand loyalty.

“It’s like talking to a digital salesperson,” Greg Cross, co-founder and chief business officer, told TechCrunch. “So you can be in an e-commerce store buying skincare products, for example, and have the opportunity to talk to a digital skincare consultant as part of the experience. One of the key things we’ve discovered, particularly during the COVID era, is more of our shopping and the way we experience brands is done in a digital world. Traditionally, a digital world is very transactional. Even chatbots are quite transactional – you type in a question, you get a response. What drives us as a company is to think about how do we imagine that human interaction with all of the digital worlds of the future?”

It’s worth noting that Soul Machines’ other co-founder, Mark Sagar, has won Academy Awards for his AI engineering work on the characters in the films “Avatar” and “King Kong.” Perhaps the skill behind producing such realistic digital humans explains the results Soul Machines reported for Yumi, a digital skincare specialist it built for SK-II, a P&G brand: a 4.6x increase in conversion rate, a 2.3% increase in customer satisfaction, and customers twice as likely to buy after interacting with her.

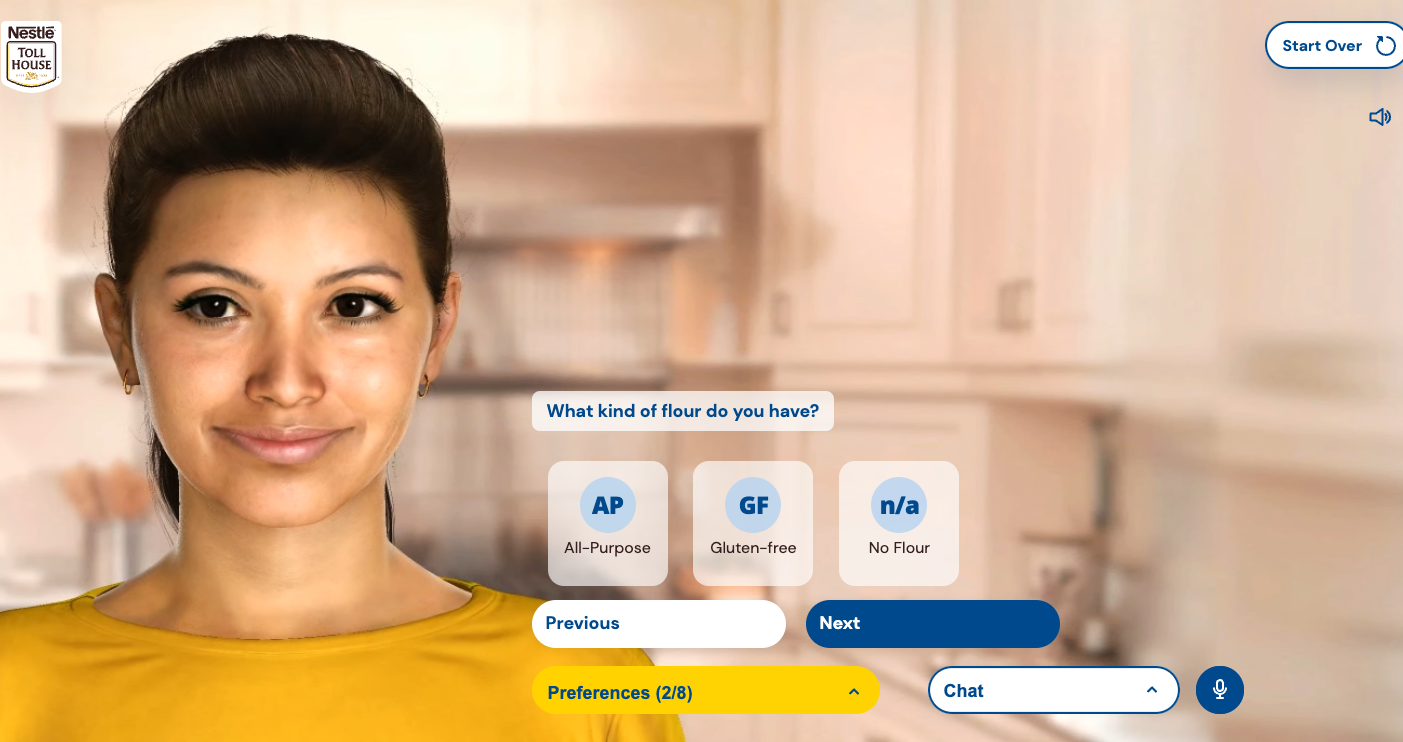

Ruth, the digital cookie coach created by Soul Machines for Nestlé Toll House.

The startup has worked with brands such as Nestlé Toll House to create Ruth, an AI-powered cookie coach that can answer basic questions about baking cookies and help customers find recipes based on what they have in their kitchen. Soul Machines also teamed up with the World Health Organization to create Florence, a virtual health worker who is available 24/7 to provide digital counseling to people trying to quit tobacco or learn more about COVID-19. There’s also Viola, who lives on the company’s website as an example of a digital assistant who can answer questions and interact with content she pulls up, like YouTube videos or maps.

For consumers, many digital assistants can feel more like a gimmick than a useful tool. But these assistants allow companies to collect first-party data on their customers, which can be used to acquire and retain customers and add more value, rather than having to spend huge sums of money to buy that data from social media platforms or Google AdWords, Cross said.

While Soul Machines has a clear outline of how it can enhance the future of customer experience, it still has a ways to go to get the tech where it needs to be. The digital people (or shall we say digital women, because Soul Machines is clearly subscribing to the woman-as-a-servant philosophy) on the market now feel like visual chatbots. They seem able to answer only scripted questions, or questions phrased in a specific way, and they cycle through a small set of responses.

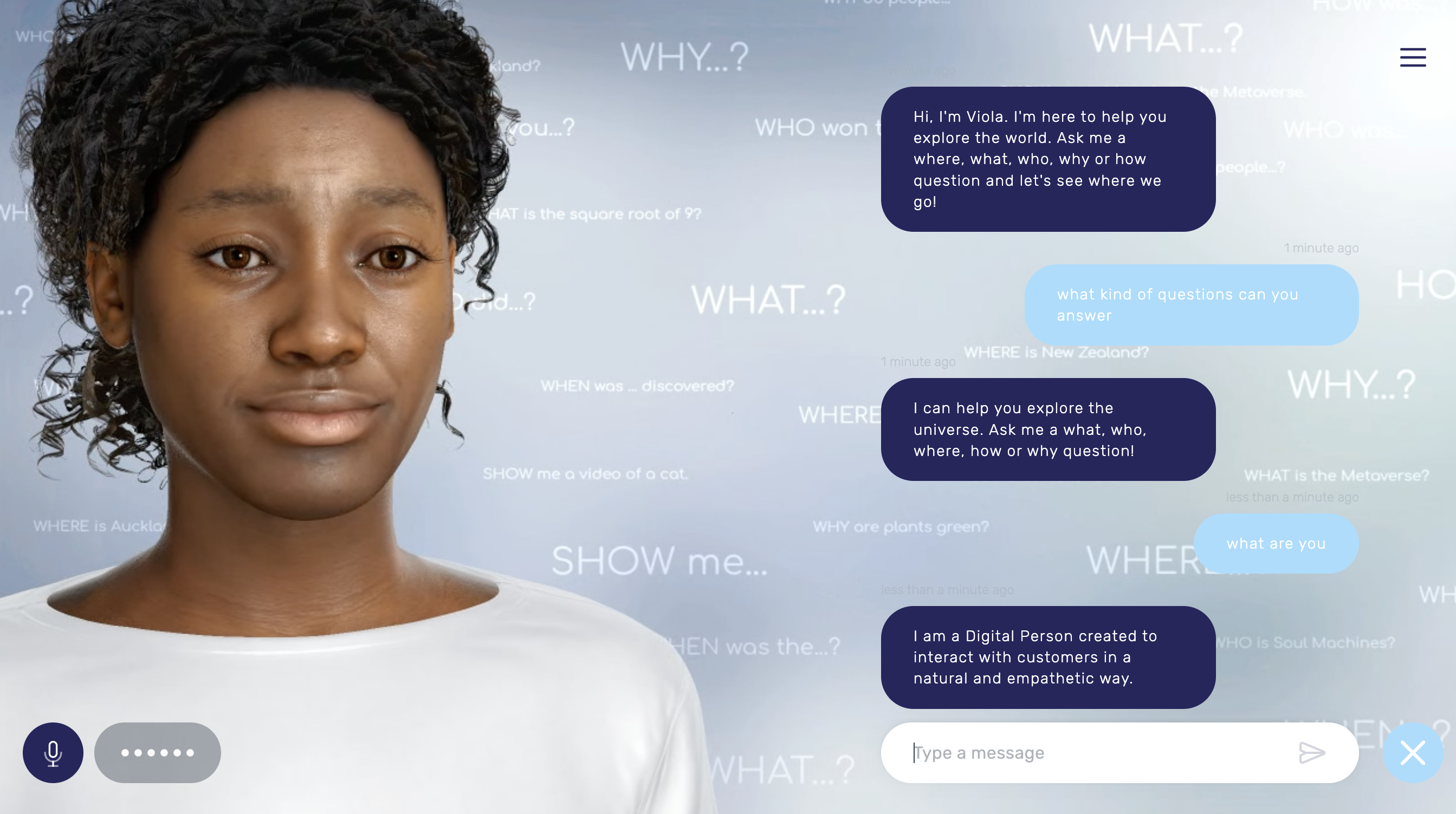

Interacting with Viola, the digital person created by Soul Machines.

For example, Viola introduces herself to the user by saying: “I’m here to help you explore the world. Ask me a Who, What, Where or Why question, and let’s see where we go.”

You can ask Viola what she is, what Soul Machines is, and some other random questions that can be pulled from online encyclopedias like, “Where is New Zealand?” or “What is the Big Bang?” I asked her what cognitive modeling and deep learning were, and she said, “Sorry, I don’t know what that is.”

If the user asks a question that’s not easy to answer, Viola often provides a standard deflection like any basic chatbot. Or she’ll respond in ways that are surprising, if certainly not intended. For example, I asked Viola: “Why do you look sad?” She responded by pulling up a YouTube video of “I’ll Stand by You” by The Pretenders. Not exactly the answer I was looking for, but Viola does at least appear to interact with the content she brings up, looking at and gesturing toward it. This suggests that Viola is aware of the content in her digital world, Cross said.

Florence, the digital person created by Soul Machines for the World Health Organization, reacts to a human’s smile with a smile of her own.

Florence and Ruth were similarly limited: they responded only to questions phrased in ways they had been trained to understand and react to, and only within the limits of their operational design domains. For her part, Florence had a decent facial-mimicking feature. When I smiled at her, she smiled back, and it was a lovely, genuine-looking smile that actually endeared me to her.

As customers interact with any of Soul Machines’ digital people, information about their facial expressions and emotional reactions is collected, anonymized and used to train the Digital Brain so that it can interpret those responses and provide an appropriate answer.

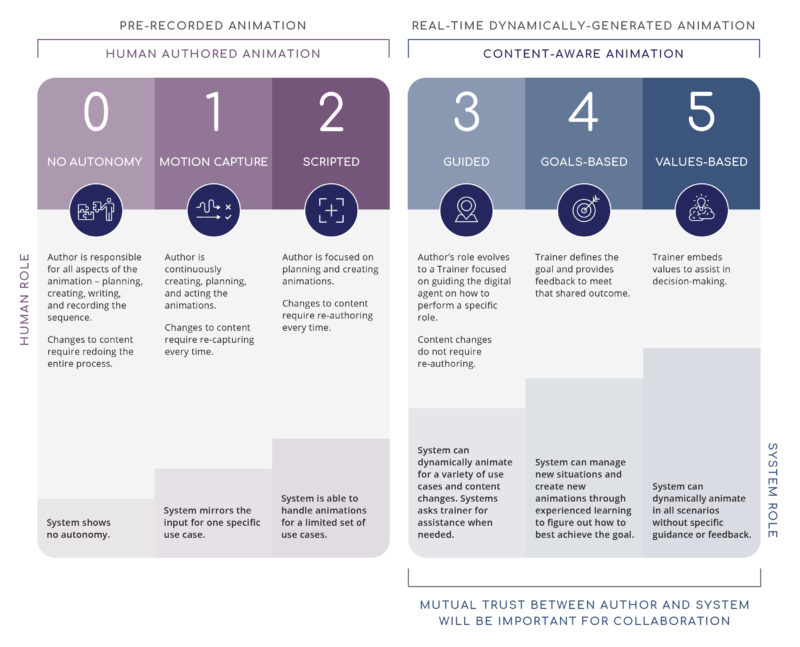

To be able to measure progress in autonomous animation, Soul Machines has written a whitepaper proposing a framework consisting of five-ish levels – there is a Level 0, which is “No Autonomy” — just a recorded animation, like a cartoon.

Image Credits: Soul Machines

Levels 1 and 2 involve pre-recorded and human-authored animation (think of how animated characters mimicked the movements of real actors in movies like “Avatar” or “The Lord of the Rings”). Levels 3 through 5 involve real-time, dynamically generated, content-aware animation. Soul Machines currently places itself at Level 3, or “Guided Animation,” which it defines as a “Cognitively Trained Animation (CTA) system [that] uses algorithms to generate a set of animations without the need for explicit authoring. Authors evolve into trainers solely focused on defining the scope of content and role. The system informs the trainers on areas of improvement.”

Soul Machines is working toward Level 4 autonomy, or “Goals-Based Animation,” in which the CTA system would dynamically generate new animations to help it reach goals set by the trainer, Cross said. The system tries new interactions and learns from each one under the trainer’s guidance. One example could be a virtual assistant that advises customers on complex financial situations and creates new behaviors on the fly, with all of those behaviors staying in line with branding and marketing goals provided by the company.

Or it could be a company using a digital version of a celebrity brand ambassador to answer questions about its products in a digital showroom. Soul Machines recently announced its intention to build a roster of digital twins of celebrities. Last year, the company started working with basketball player Carmelo Anthony, of the Los Angeles Lakers, to create a digital likeness of him. It has done this before with rapper Will.I.am, a project featured on a 2019 episode of “The Age of A.I.,” a YouTube Originals series hosted by actor Robert Downey Jr.

Anthony is already a Nike ambassador, so in theory, Soul Machines could use his likeness to create an experience that’s perhaps available only to VIP customers who own a set of NFTs that unlocks it, Cross said. That digital Anthony might also be able to speak Mandarin or any other language in his own voice, opening brands up to new audiences.

“We’re really preparing for this next big step from the 2D internet world of today, which I believe will still very much be our on ramp, to the metaverse for the 3D world where digital people will need to be fully animated,” Cross said.

Soul Machines currently has prototypes of digital people that can interact with one another, responding emotionally and answering the questions they ask each other, according to Cross. The co-founder thinks this will first be applied to metaverse spaces like a digital fashion store inhabited by multiple people, some of whom are digital people and some of whom are avatars controlled by humans.

In the future, Soul Machines envisions a world where people can create digital replicas of themselves.

“We’re very much on the path to creating at some point in the future these hyper-realistic digital twins of ourselves and being able to train them just by interacting with them online,” Cross said. “And then being able to send them off into the metaverse to work for us while we play golf or lie on the beach. That’s a version of the world of the future.”