As Snap’s creators begin to experiment with the company’s augmented reality Spectacles hardware, the company is delving deeper into juicing the capabilities of its Lens Studio to build augmented reality filters that are more connected, more realistic and more futuristic. At the company’s annual Lens Fest event, Snap debuted a number of changes coming to its lens creation suite. Changes range from efforts to integrate outside media and data to more AR-centric features designed with a glasses-future in mind.

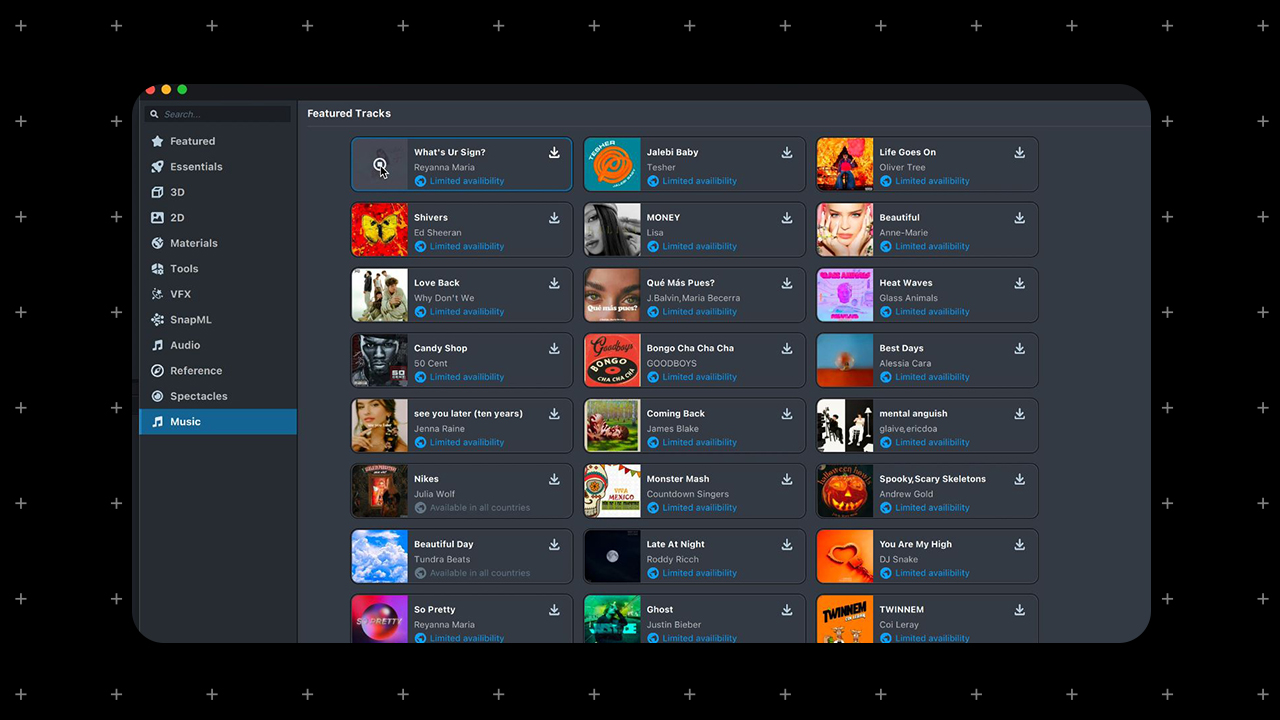

On the media side, Snap will be debuting a new sounds library which will allow creators to add audio clips and millions of songs from Snapchat’s library of licensed music directly into their lenses. Snap is also making efforts to bring real-time data into Lenses via an API library that showcases evolving trends like weather information from AccuWeather or cryptocurrency prices from FTX. One of the bigger feature updates will allow creators to embed links inside lenses that send users to different web pages.

Image: Snap

Snap’s once-goofy selfie filters remain a big growth opportunity for the company, which has long had augmented reality in its sights. Snap detailed that there are now more than 2.5 million lenses that have been built by more than a quarter-million creators. Those lenses have been viewed by users a collective 3.5 trillion times, the company says. The company is building out its own internal “AR innovation lab,” called Ghost, which will help the company bankroll Lens designers who are looking to push the limits of what’s possible, dishing out grants of up to $150k for individual projects.

As the company looks to make lenses smarter, it’s also looking to make them more technically capable.

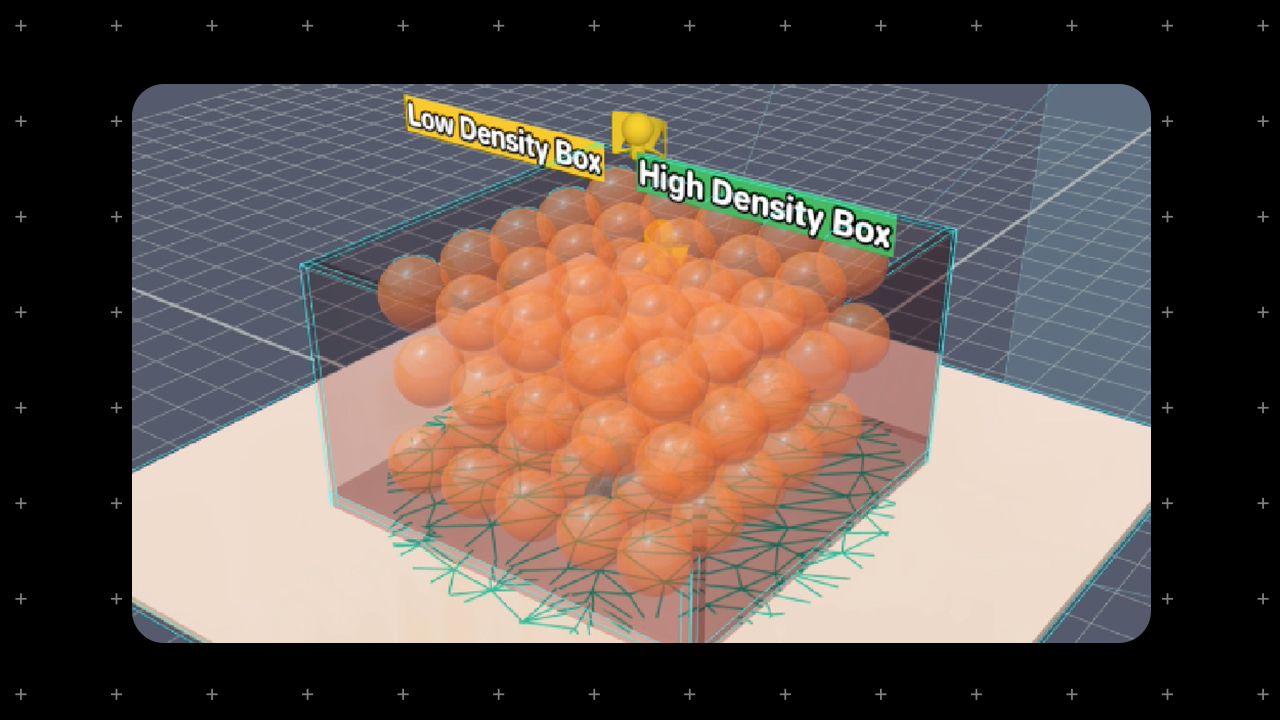

Beyond integrating new data types, Snap is also working on the underlying AR tech to make lenses more enjoyable for users with lower-end phones. Its World Mesh feature has let users with higher-end phones view lenses that incorporate more real-world geometry data, giving digital objects in a lens something to interact with. Now, Snap is enabling this feature across more basic phones as well.

Image: Snap

Similarly, Snap is also rolling out tools to make digital objects react more realistically in relation to each other, debuting an in-lens physics engine which will allow for more dynamic lenses that can not only interact more deeply with the real world but can adjust to simultaneous user input as well.

Snap’s efforts to create more sophisticated lens creation tools on mobile come as the company is also looking to build out more future-flung support for the tools developers may need to design for hands-free glasses experiences on its new AR Spectacles. Creators have been crafting experiences with the new hardware for months and Snap has been building new lens functionality to address their concerns and spark up new opportunities.

Image: Snap

Ultimately, Snap’s glasses are still firmly in developer mode and the company hasn’t offered any timelines for when it might ship a consumer product with integrated AR capabilities, so it theoretically has plenty of time to build in the background.

Some of the tools Snap has been quietly building include Connected Lenses, which enable shared experiences inside Lenses so multiple users can interact with the same content using AR Spectacles. In their developer iteration, the AR Spectacles don’t have the longest battery life, meaning that Snap has had to get creative in ensuring that Lenses are there when you need them without running persistently. The company’s Endurance mode allows lenses to continue running in the background off-display while waiting for a specific trigger like reaching a certain GPS location.