On Wednesday, Instagram head Adam Mosseri is set to testify before the Senate for the first time on how the app is affecting teens' mental health. The hearing follows recent testimony from Facebook whistleblower Frances Haugen, which has portrayed the company as caring more about profits than user safety. Just ahead of that hearing, Instagram has announced a new set of safety features, including its first set of parental controls.

The changes were introduced through a company blog post, authored by Mosseri.

Not all the features are brand-new; some are smaller expansions of safety features the company already had in the works.

However, the bigger news today is Instagram's plan to launch its first set of parental control features in March. These features will allow parents and guardians to see how much time teens spend on Instagram and to set screen time limits. Teens will also be given the option to alert their parents when they report someone. These tools are opt-in: teens can choose not to send alerts, and teens and parents are not required to use parental controls at all.

As described, the parental controls are also less powerful than those on rival TikTok, where parents can lock their children's accounts into a restricted experience, block access to search, and control both their child's visibility on the platform and who can view their content, comment on it or message them. Screen time limits, meanwhile, are already offered at the platform level: Apple's iOS and Google's Android mobile operating systems both include similar controls. In other words, Instagram isn't offering much that is new in terms of parental controls, though it notes it will "add more options over time."

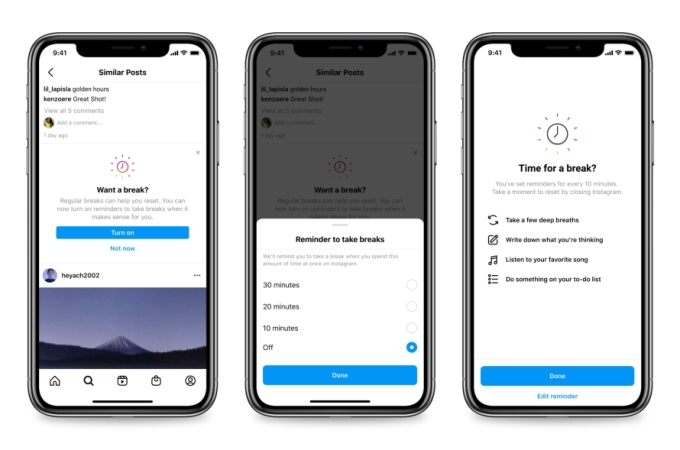

Another of the features was announced previously. Earlier this month, Instagram launched a test of its new "Take a Break" feature, which lets users set a reminder to take a break from the app after 10, 20 or 30 minutes, depending on their preference. This feature will now officially launch in the U.S., U.K., Ireland, Canada, Australia and New Zealand.

Image Credits: Instagram

Unlike on rival TikTok, where videos urging users to get off the app appear in the main feed after a certain amount of time, Instagram's "Take a Break" feature is opt-in only. The company will begin suggesting that users set these reminders, but it will not require that they do so. That gives Instagram the appearance of combating app addiction without going so far as to enable "Take a Break" by default or, like TikTok, regularly remind users to get off the app.

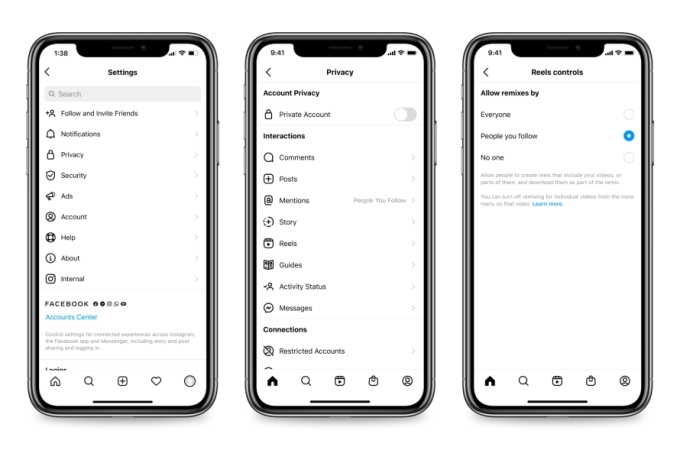

Another feature expands on earlier efforts to distance teens from contact with adults. Instagram has already begun defaulting teens' accounts to private, restricting targeted advertising and limiting unwanted adult contact. For the latter, it uses technology to identify "potentially suspicious behavior" from adult users and then prevents those users from interacting with teens' accounts. It has also stopped adults from contacting teens who don't already follow them, and it notifies teens when an adult is engaging in suspicious behavior while giving them tools to block and report that adult.

Now it will expand these protections by also preventing adults from tagging or mentioning teens who don't follow them, and from including teens' content in Reels Remixes (video content) or Guides. These will be the new default settings and will roll out next year.

Image Credits: Instagram

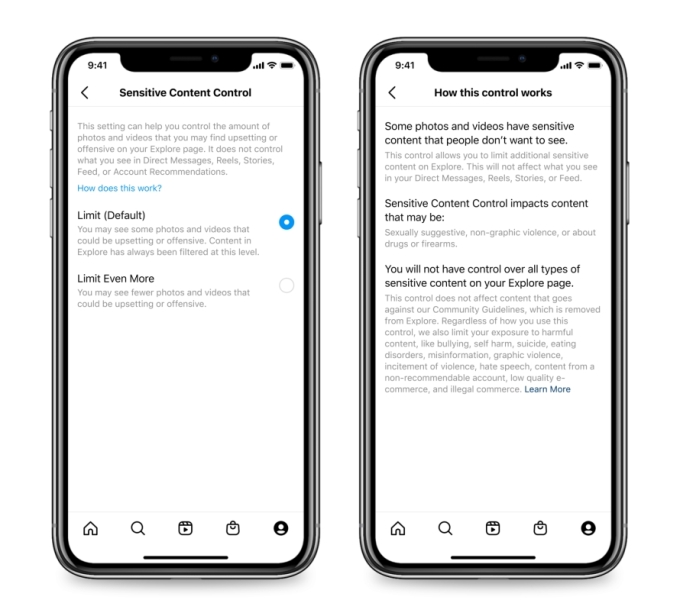

Instagram says it will also be stricter about what’s recommended to teens in sections of the app like Search, Explore, Hashtags, and Suggested Accounts.

But in describing these plans, the company seems not to have made a firm decision yet on what will change. Instead, Instagram says it's "exploring" the idea of limiting content in Explore using the sensitive content control features it launched in July. The company says it's considering expanding the "Limit Even More" setting, the strictest option, to cover not just Explore but also Search, Hashtags, Reels and Suggested Accounts.

Image Credits: Instagram

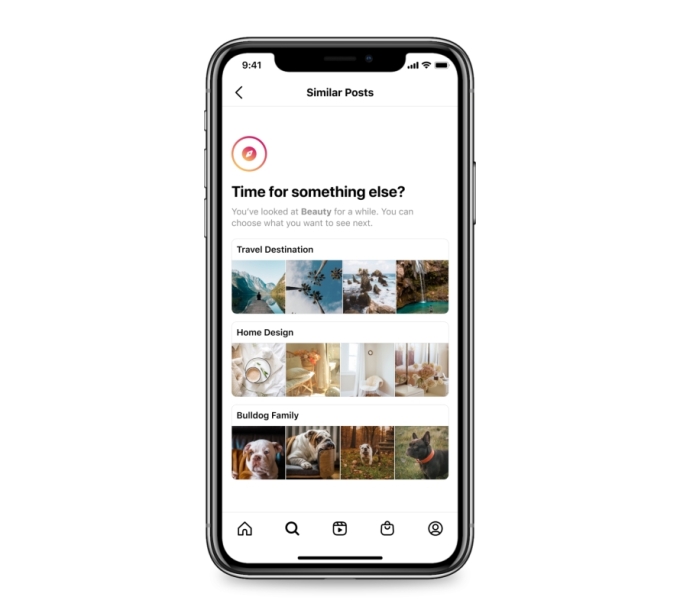

It also says that if it sees people dwelling on a topic for a while, it may nudge them toward other topics, but it didn't share details about this feature, as it's still under development. Presumably, this is meant to address concerns about teens exploring potentially harmful content, such as posts that could trigger eating disorders, anxiety or depression. In practice, the feature could also be used to steer users toward content that is more profitable for the app, such as posts from influencers who drive traffic to monetizable products like Instagram Shopping, LIVE videos and Reels.

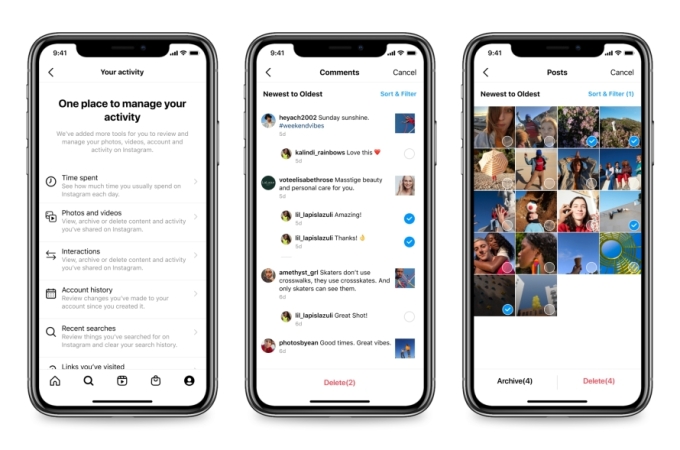

Instagram will also roll out tools this January that allow users to bulk delete photos and videos from their accounts to clean up their digital footprint. The feature will be offered as part of a new hub where users can view and manage their activity on the app.

Image Credits: Instagram

This addition is being positioned as a safety feature: as users get older, they may better understand what it means to share personal content online and may come to regret their earlier posts. However, bulk deletion is really the sort of feature that any content management system (that's behaving ethically) should offer its users, which means not just Instagram but also Facebook, Twitter and other social networks.

The company said these are only some of the features it has in development and noted that it's still working on a new technology-based approach to verifying people's ages on Instagram.

"As always, I'm grateful to the experts and researchers who lend us their expertise in critical areas like child development, teen mental health and online safety," Mosseri wrote, "and I continue to welcome productive collaboration with lawmakers and policymakers on our shared goal of creating an online world that both benefits and protects many generations to come."