BigScience, a community project backed by startup Hugging Face with the goal of making text-generating AI widely available, is developing a system called Petals that can run AI like ChatGPT by joining resources from people across the internet. With Petals, the code for which was released publicly last month, volunteers can donate their hardware power to tackle a portion of a text-generating workload and team up with others to complete larger tasks, similar to Folding@home and other distributed compute setups.

“Petals is an ongoing collaborative project from researchers at Hugging Face, Yandex Research and the University of Washington,” Alexander Borzunov, the lead developer of Petals and a research engineer at Yandex, told TechCrunch in an email interview. “Unlike … APIs that are typically less flexible, Petals is entirely open source, so researchers may integrate latest text generation and system adaptation methods not yet available in APIs or access the system’s internal states to study its features.”

Open source, but not free

For all its faults, text-generating AI such as ChatGPT can be quite useful — at least if the viral demos on social media are anything to go by. ChatGPT and its kin promise to automate some of the mundane work that typically bogs down programmers, writers and even data scientists by generating human-like code, text and formulas at scale.

But they’re expensive to run. According to one estimate, ChatGPT is costing its developer, OpenAI, $100,000 per day, which works out to an eye-watering $3 million per month.

The costs involved with running cutting-edge text-generating AI have kept it relegated to startups and AI labs with substantial financial backing. It’s no coincidence that the companies offering some of the more capable text-generating systems, including AI21 Labs, Cohere and the aforementioned OpenAI, have raised hundreds of millions of dollars in capital from VCs.

But Petals democratizes things — in theory. Inspired by Borzunov’s earlier work focused on training AI systems over the internet, Petals aims to drastically bring down the costs of running text-generating AI.

“Petals is a first step towards enabling truly collaborative and continual improvement of machine learning models,” Colin Raffel, a faculty researcher at Hugging Face, told TechCrunch via email. “It … marks an ongoing shift from large models mostly confined to supercomputers to something more broadly accessible.”

Raffel made reference to the gold rush, of sorts, that’s occurred over the past year in the open source text generation community. Thanks to volunteer efforts and the generosity of tech giants’ research labs, the type of bleeding-edge text-generating AI that was once beyond reach of small-time developers suddenly became available, trained and ready to deploy.

BigScience debuted Bloom, a language model in many ways on par with OpenAI’s GPT-3 (the progenitor of ChatGPT), while Meta open sourced a comparably powerful AI system called OPT. Meanwhile, Microsoft and Nvidia partnered to make available one of the largest language systems ever developed, MT-NLG.

But all these systems require powerful hardware to use. For example, running Bloom on a local machine requires a GPU retailing in the hundreds to thousands of dollars. Enter the Petals network, which Borzunov claims will be powerful enough to run and fine-tune AI systems for chatbots and other “interactive” apps once it reaches sufficient capacity. To use Petals, users install an open source library and visit a website that provides instructions to connect to the Petals network. After they’re connected, they can generate text from Bloom running on Petals, or create a Petals server to contribute compute back to the network.

The more servers, the more robust the network. If one server goes down, Petals attempts to find a replacement automatically. While servers disconnect after around 1.5 seconds of inactivity to save on resources, Borzunov says that Petals is smart enough to quickly resume sessions, leading to only a slight delay for end-users.
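The failover idea can be illustrated with a toy model. The sketch below is not Petals' actual routing code; it assumes, for illustration only, that each volunteer server holds a contiguous slice of a model's layers and that a client chains servers together until every layer is covered. The `Server` class, the `find_route` function and the server names are all hypothetical.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Server:
    """A hypothetical volunteer machine holding model layers [start, end)."""
    name: str
    start: int
    end: int
    online: bool = True


def find_route(servers: List[Server], num_layers: int) -> Optional[List[str]]:
    """Greedily chain online servers until layers 0..num_layers-1 are covered.

    Returns the server names in order, or None if some layer has no online host.
    """
    route: List[str] = []
    layer = 0
    while layer < num_layers:
        # Online servers that can continue the computation from `layer`.
        candidates = [s for s in servers if s.online and s.start <= layer < s.end]
        if not candidates:
            return None
        best = max(candidates, key=lambda s: s.end)  # covers the most layers
        route.append(best.name)
        layer = best.end
    return route


# Toy swarm: bob and carol hold the same slice, so either can serve it.
swarm = [Server("alice", 0, 4), Server("bob", 4, 8), Server("carol", 4, 8)]
print(find_route(swarm, 8))   # route through alice, then bob

swarm[1].online = False       # bob drops out
print(find_route(swarm, 8))   # carol takes over bob's layers

swarm[2].online = False       # no host left for layers 4-7
print(find_route(swarm, 8))   # no complete route exists
```

In this toy setup, every extra server that duplicates a slice is one more failure the network can absorb, which is the sense in which more servers make it more robust.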

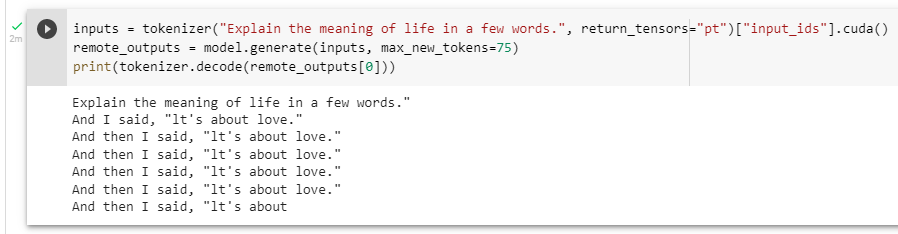

Testing the Bloom text-generating AI system running on the Petals network. Image Credits: Kyle Wiggers / TechCrunch

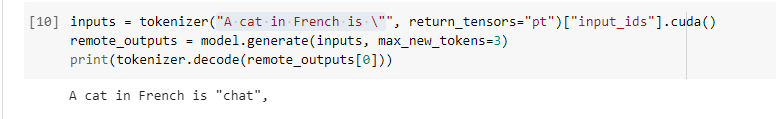

In my tests, generating text using Petals took anywhere from a couple of seconds for basic prompts (e.g. “Translate the word ‘cat’ to Spanish”) to well over 20 seconds for more complex requests (e.g. “Write an essay in the style of Diderot about the nature of the universe”). One prompt (“Explain the meaning of life”) took close to three minutes, but to be fair, I instructed the system to respond with a wordier answer (around 75 words) than the previous few.

Image Credits: Kyle Wiggers / TechCrunch

That’s noticeably slower than ChatGPT — but also free. While ChatGPT doesn’t cost anything today, there’s no guarantee that that’ll be true in the future.

Borzunov wouldn’t reveal how large the Petals network is currently, save that “multiple” users with “GPUs of different capacity” have joined it since its launch in early December. The goal is to eventually introduce a rewards system to incentivize people to donate their compute; donors will receive “Bloom points” that they can spend on “higher priority or increased security guarantees” or potentially exchange for other rewards, Borzunov said.

Limitations of distributed compute

Petals promises to provide a low-cost, if not completely free, alternative to the paid text-generating services offered by vendors like OpenAI. But major technical kinks have yet to be ironed out.

Most concerning are the security flaws. The GitHub page for the Petals project notes that, because of the way Petals works, it’s possible for servers to recover input text — including text meant to be private — and record and modify it in a malicious way. That might entail sharing sensitive data with other users in the network, like names and phone numbers, or tweaking generated code so that it’s intentionally broken.

Petals also doesn’t address any of the flaws inherent in today’s leading text-generating systems, like their tendency to generate toxic and biased text (see the “Limitations” section in the Bloom entry on Hugging Face’s repository). In an email interview, Max Ryabinin, a senior research scientist at Yandex Research, made it clear that Petals is intended for research and academic use, at least at present.

“Petals sends intermediate … data through the public network, so we ask not to use it for sensitive data because other peers may (in theory) recover them from the intermediate representations,” Ryabinin said. “We suggest people who’d like to use Petals for sensitive data to set up their own private swarm hosted by orgs and people they trust who are authorized to process this data. For example, several small startups and labs may collaborate and set up a private swarm to protect their data from others while still getting benefits of using Petals.”

As with any distributed system, Petals could also be abused by end-users, either by bad actors looking to generate toxic text (e.g. hate speech) or developers with particularly resource-intensive apps. Raffel acknowledges that Petals will inevitably “face some issues” at the start. But he believes that the mission — lowering the barrier to running text-generating systems — will be well worth the initial bumps in the road.

“Given the recent success of many community-organized efforts in machine learning, we believe that it is important to continue developing these tools and hope that Petals will inspire other decentralized deep learning projects,” Raffel said.

Petals is creating a free, distributed network for running text-generating AI by Kyle Wiggers originally published on TechCrunch